-

Computing Power

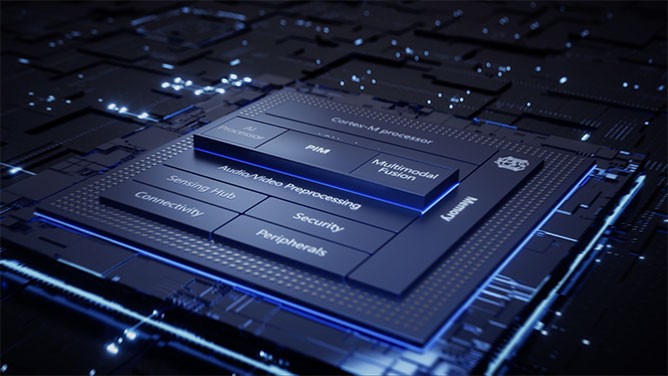

The N300 delivers 0.5 TOPS of computing power per core and supports a cluster architecture composed of multiple NPU cores.
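To illustrate what the per-core figure implies, the sketch below computes an ideal aggregate throughput for a cluster and a theoretical lower bound on per-inference latency. It assumes perfect linear scaling across cores and 100% utilization, which real workloads do not reach; the core counts and the 0.6 GOPs model size are hypothetical. TOPS figures are often quoted with one multiply-accumulate counted as two operations, so a model's operation count must use the same convention.

```python
# Theoretical-throughput arithmetic for a multi-core NPU cluster.
# Assumptions (not from the product description): perfect linear scaling
# across cores and 100% utilization; real utilization will be lower.

PER_CORE_TOPS = 0.5  # single-core figure quoted for the N300

def peak_tops(num_cores: int, per_core_tops: float = PER_CORE_TOPS) -> float:
    """Ideal aggregate throughput of a cluster, in TOPS."""
    return num_cores * per_core_tops

def min_latency_ms(model_gops: float, num_cores: int) -> float:
    """Lower bound on per-inference latency in milliseconds.

    model_gops: operations per inference, in billions (GOPs).
    """
    ops = model_gops * 1e9
    ops_per_second = peak_tops(num_cores) * 1e12
    return ops / ops_per_second * 1e3

if __name__ == "__main__":
    # Hypothetical model needing 0.6 GOPs per inference.
    for cores in (1, 2, 4):
        print(f"{cores} core(s): {peak_tops(cores):.1f} TOPS, "
              f">= {min_latency_ms(0.6, cores):.2f} ms per inference")
```

Measured latency will be higher than this bound because of memory traffic, scheduling overhead, and operators that do not fully occupy the compute array.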

-

Accuracy

The N300 supports mixed-precision computing: 4-bit and 8-bit integer computation as well as 16-bit floating-point computation. This allows a better balance between energy consumption, compute density, and computational accuracy.
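To make the precision trade-off concrete, the sketch below applies symmetric per-tensor quantization to a few weights at 4 and 8 bits, measures the reconstruction error, and compares the storage each bit width needs. Symmetric per-tensor quantization is one common scheme chosen here for illustration; the quantization method used by the N300 toolchain is not described in this document.

```python
# Illustrative symmetric per-tensor quantization (an assumed scheme,
# not necessarily what the N300 toolchain uses).

def quantize_symmetric(values, bits):
    """Map floats to signed integers in [-qmax, qmax]; return (ints, scale)."""
    qmax = 2 ** (bits - 1) - 1          # 7 for 4-bit, 127 for 8-bit
    max_abs = max(abs(v) for v in values)
    scale = max_abs / qmax if max_abs > 0 else 1.0
    q = [max(-qmax, min(qmax, round(v / scale))) for v in values]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float values from quantized integers."""
    return [x * scale for x in q]

def storage_bytes(num_params, bits):
    """Bytes needed to store num_params values at the given bit width."""
    return num_params * bits // 8

if __name__ == "__main__":
    weights = [0.5, -1.0, 0.25, 0.75]
    for bits in (4, 8):
        q, scale = quantize_symmetric(weights, bits)
        err = max(abs(a - b) for a, b in zip(weights, dequantize(q, scale)))
        print(f"{bits}-bit: q={q}, max abs error={err:.4f}")
    for bits in (4, 8, 16):
        print(f"1M params at {bits}-bit: {storage_bytes(1_000_000, bits)} bytes")
```

The rounding error per value is at most half the scale, so 4-bit weights take half the storage of 8-bit ones at the cost of a roughly 18x larger step size; 16-bit floating point keeps far more precision at four times the 4-bit footprint.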

-

Flexibility

A high-speed task-scheduling acceleration unit supports real-time scheduling of tasks across multiple cores or multiple computing units.
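The scheduling unit is implemented in hardware and its policy is not documented here. As a purely conceptual software analogue, the sketch below dispatches a queue of tasks to whichever core becomes free first (greedy list scheduling with a min-heap). The task costs, core counts, and the policy itself are assumptions for illustration only.

```python
# Conceptual software analogue of multi-core task dispatch.
# Policy (assumed): send each task to the core that becomes idle earliest.
import heapq

def schedule(task_costs, num_cores):
    """Return (core assigned to each task, time at which all tasks finish)."""
    # Heap entries are (busy_until, core_id); ties resolve to the lower core id.
    heap = [(0, core) for core in range(num_cores)]
    heapq.heapify(heap)
    assignment = []
    for cost in task_costs:
        busy_until, core = heapq.heappop(heap)   # earliest-available core
        assignment.append(core)
        heapq.heappush(heap, (busy_until + cost, core))
    makespan = max(t for t, _ in heap)
    return assignment, makespan

if __name__ == "__main__":
    # Hypothetical task costs in arbitrary time units, two cores.
    print(schedule([4, 2, 3, 1], num_cores=2))
```

Each dispatch decision costs O(log n) for n cores; a dedicated hardware unit exists precisely to make such decisions without spending CPU cycles on them.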

-

Compatibility

The N300 supports custom operators to meet the deployment needs of a wide range of models, and provides configuration schemes and targeted optimizations for application scenarios such as voice activity detection, eye tracking, active noise control, and environmental perception.
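This document does not describe how custom operators are registered. The sketch below shows one generic pattern, a name-to-function registry consulted when a model graph is executed, with a custom `hard_sigmoid` operator added alongside a built-in. All names here (`register_op`, `run_graph`, `OP_REGISTRY`, `hard_sigmoid`) are hypothetical and are not part of the N300 toolchain API.

```python
# Hypothetical operator registry; not the N300 toolchain API.
from typing import Callable, Dict, List

OpFn = Callable[[List[float]], List[float]]
OP_REGISTRY: Dict[str, OpFn] = {}

def register_op(name: str):
    """Decorator that adds an elementwise operator to the registry."""
    def wrap(fn: OpFn) -> OpFn:
        if name in OP_REGISTRY:
            raise ValueError(f"operator {name!r} already registered")
        OP_REGISTRY[name] = fn
        return fn
    return wrap

@register_op("relu")
def relu(x):
    return [max(0.0, v) for v in x]

# Custom operator: one common piecewise-linear hard sigmoid (slope 0.2, offset 0.5).
@register_op("hard_sigmoid")
def hard_sigmoid(x):
    return [min(1.0, max(0.0, 0.2 * v + 0.5)) for v in x]

def run_graph(op_names: List[str], x: List[float]) -> List[float]:
    """Apply operators in sequence; fail clearly if one is missing."""
    for name in op_names:
        if name not in OP_REGISTRY:
            raise KeyError(f"no implementation registered for {name!r}")
        x = OP_REGISTRY[name](x)
    return x

if __name__ == "__main__":
    print(run_graph(["relu", "hard_sigmoid"], [-2.0, 0.0, 1.0, 5.0]))
```

Rejecting duplicate names at registration time keeps a custom operator from silently shadowing a built-in one.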

-

Software and toolchains

To give customers more autonomy and flexibility when migrating algorithms, the N300 opens up its NPU intermediate representation (IR) layer specification, model parser, model optimizer, drivers, and other components, and provides a free software toolchain that includes a software simulator, a debugger, and a C compiler.
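The IR format and the optimizer's passes are not described here. As a hedged illustration of what an optimizer pass over an IR can do, the sketch below fuses each convolution that is immediately followed by a ReLU into a single node, a common fusion in NPU compilers. The linear node list, the `Node` class, and the op names are hypothetical.

```python
# Toy linear IR and one fusion pass; node format and op names are hypothetical.
from dataclasses import dataclass, field
from typing import List

@dataclass
class Node:
    op: str
    attrs: dict = field(default_factory=dict)

def fuse_conv_relu(graph: List[Node]) -> List[Node]:
    """Replace each 'conv2d' directly followed by 'relu' with 'conv2d_relu'."""
    out: List[Node] = []
    i = 0
    while i < len(graph):
        node = graph[i]
        if (node.op == "conv2d" and i + 1 < len(graph)
                and graph[i + 1].op == "relu"):
            # Keep the convolution's attributes on the fused node.
            out.append(Node("conv2d_relu", dict(node.attrs)))
            i += 2  # skip the consumed relu
        else:
            out.append(node)
            i += 1
    return out

if __name__ == "__main__":
    g = [Node("conv2d", {"k": 3}), Node("relu"), Node("maxpool"),
         Node("conv2d", {"k": 1}), Node("add")]
    print([n.op for n in fuse_conv_relu(g)])
```

In a real graph-based IR the pass would also have to confirm that the ReLU is the convolution's only consumer before fusing; the linear list used here sidesteps that check.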

-

Supported Networks

MobileNet, ResNet, YOLOv2/v3, UNet, ShuffleNet, SqueezeNet, EfficientNet, LSTM, and any network that can be decomposed into combinations of the operators used by these networks.
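The last item implies a simple compatibility test: a network is deployable if every operator it uses is in the supported set, and any missing operator would need to be supplied as a custom operator. The sketch below performs that check. The operator names in `SUPPORTED_OPS` are a hypothetical stand-in, since this document does not enumerate the N300's operator list.

```python
# Hypothetical operator coverage check; SUPPORTED_OPS is illustrative only.
SUPPORTED_OPS = {
    "conv2d", "depthwise_conv2d", "relu", "add", "concat",
    "maxpool", "avgpool", "fully_connected", "lstm", "upsample",
}

def unsupported_ops(model_ops, supported=SUPPORTED_OPS):
    """Return, sorted, the operators a model uses that are not supported."""
    return sorted(set(model_ops) - set(supported))

def is_deployable(model_ops, supported=SUPPORTED_OPS):
    """True if every operator in the model is covered by the supported set."""
    return not unsupported_ops(model_ops, supported)

if __name__ == "__main__":
    mobilenet_like = ["conv2d", "depthwise_conv2d", "relu", "avgpool", "fully_connected"]
    custom_net = ["conv2d", "relu", "gelu", "layer_norm"]
    print(is_deployable(mobilenet_like), unsupported_ops(custom_net))
```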